Introduction

Cervical cancer, predominantly initiated by persistent human papillomavirus (HPV) infection acquired through sexual contact, is a formidable global health challenge. Human papillomavirus is the primary aetiological factor, responsible for 12% of all malignancies and ranking as the second leading cause of death among women worldwide [1]. This association between HPV and cervical cancer is particularly pronounced, with certain types of HPV being categorised as high-risk due to their potential to trigger cervical cancer development. Among these high-risk types, HPV-16 and HPV-18 have emerged as prominent culprits, leading to persistent infections that significantly contribute to the onset of cervical cancer [2].

The impact of HPV infection on cervical cancer risk is further exacerbated in women living with HIV, who face a six-fold increase in susceptibility to cervical cancer compared with their HIV-negative counterparts. This underscores the intricate interplay between HPV and immunocompromised states, emphasising the urgent need to address cervical cancer risk in vulnerable populations [1].

Human papillomavirus, a diverse group of viruses with the capacity to infect not only the genital area, but also the mouth and throat, primarily spreads through sexual contact. Despite the prevalence of HPV exposure in sexually active populations, the immune system typically clears the infection in most cases. However, persistent infections may occur when the immune system fails to eliminate the virus, thus paving the way for the development of abnormal cervical cells. Progression from persistent infection to cervical cancer is a well-established continuum that underscores the critical importance of timely detection and intervention [2].

This research article delves into the crucial nexus between HPV infection and cervical cancer, exploring avenues to enhance understanding, detection, and, ultimately, the prevention of this devastating disease. By investigating novel methodologies for HPV classification in colposcopy images, this study aimed to provide valuable insights into the existing body of knowledge in medical imaging and cancer detection, addressing a notable gap in current research endeavours.

Cervical cancer is strongly associated with certain types of HPV. Human papillomavirus is a group of related viruses that can infect the genital area as well as the mouth and throat. Among the many types of HPV, some are considered high risk because they can lead to the development of cervical cancer. The link between HPV and cervical cancer is well established. Persistent infection with high-risk HPV types, particularly HPV-16 and HPV-18, is the leading cause of cervical cancer. Human papillomavirus is primarily transmitted through sexual contact, and most sexually active individuals are exposed to the virus at some point in their life. However, in most cases, the immune system clears the infection. Persistent infection can occur when the immune system fails to eliminate the virus. Over time, this can lead to the development of abnormal cells in the cervix, which can progress to cervical cancer if not detected and treated [3].

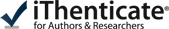

Preventive measures, including HPV vaccination of both males and females, are advised to reduce the risk of HPV-associated cervical cancer. Vaccines targeting the prevalent high-risk genotypes of HPV, namely HPV-16 and HPV-18, confer protection against infection and substantially reduce the risk of developing cervical cancer. Cervical cancer screening, which encompasses HPV testing and Pap examinations, improves the efficacy of treatment by facilitating early detection of cervical abnormalities. Consistent gynaecological screening and prompt medical attention in response to unusual symptoms or test results can aid in the detection and treatment of cervical abnormalities and cancer in their early stages. The public must understand the connection between HPV and cervical cancer if they are to be motivated to participate in screening programs and take preventative measures. To mitigate the potential for HPV-associated cervical cancer, it is imperative to maintain regular communication with healthcare professionals and adhere rigorously to prescribed vaccination and screening protocols. Figure 1 shows sample images that tested positive or negative for HPV.

Various techniques are employed to detect HPV, including Pap smears, DNA and RNA testing, visual examinations using acetic acid or Lugol’s iodine, and biopsies. During a Pap smear, a healthcare professional uses a spatula or brush to collect cells from the cervical region, and the collected cells are examined under a microscope for irregularities. Standard screening may also incorporate an HPV DNA test, which examines the cell samples for high-risk HPV DNA as part of cervical cancer risk assessment. RNA detection, an indicator of viral replication, ascertains the presence of active HPV infection in the cells. Visual inspection using acetic acid or Lugol’s iodine is feasible in contexts where resources are limited: applying the solution to the cervix may induce discernible alterations in colour or texture, which may indicate the existence of abnormalities. Biopsies are performed when abnormalities are detected during the screening process. A tissue sample is first collected from the site of interest (e.g. the cervix) for microscopic examination. The primary objective of this test is to assess the existence of HPV-associated abnormalities and provide comprehensive data regarding their severity. Early detection and prevention of cervical cancer depend on these screening procedures.

Numerous variables can affect the uptake of cervical cancer screening methods, including the Pap smear and HPV testing. A lack of understanding of the importance of screening and of the correlation between HPV and cervical cancer may contribute to hesitancy. Fear, anxiety, distress, and unease about a potential cancer diagnosis are typical responses to cancer screening. Avoidance behaviours can be shaped by prevailing cultural and community norms, in addition to systemic taboos concerning reproductive health. Because HPV is sexually transmitted, individuals may be reluctant to seek treatment owing to concerns about stigma, humiliation, and invasion of privacy. Obstacles to the timely delivery of screening services may include financial limitations, geographical restrictions, and scarcity of healthcare services. Frequent misunderstandings regarding the screening procedure and its objectives can cause some to forgo it. Additionally, individuals may become complacent if they believe that they are not at risk. Education, public health campaigns, and community outreach programs should be implemented to address these issues. This is essential for increasing awareness, refuting misconceptions, enhancing early detection and prevention programs, and increasing cervical cancer screening rates.

Because of significant limitations in infrastructure, resources, and financial means, the installation of cervical cancer screening apparatus in every healthcare facility situated in remote regions is not viable. Thus, colposcopy represents the most pragmatic and effective approach for establishing economical screening initiatives for HPV detection. To accurately diagnose cervical cancer in remote rural areas, paramedical professionals must be able to identify HPV using simplified, practical, and readily comprehensible methods. Consequently, an automated method is necessary for HPV detection in cervical cancer patients.

The major contributions of this study are as follows:

colposcopy images were utilised in a novel manner to classify HPV, as described in this study. Detection of cervical cancer, the second most prevalent malignancy among women worldwide, is the primary objective of this approach. This is an innovative method for classifying HPVs,

by investigating the uncharted domain of HPV-based colposcopy image identification in the context of cervical cancer, this study fills a substantial void in the existing body of knowledge. This novel perspective contributes to our understanding of the potential applications of medical imaging in the context of cervical cancer screening,

low-income and underdeveloped regions can benefit from colposcopy screening, according to the study, because it does not require biopsy specimens and only requires a compact, cost-effective setup. Within healthcare systems characterised by resource constraints, this attribute underscores the potential for extensive integration,

within the methodological framework, robust dataset augmentation and feature extraction were performed using the EfficientNetB0 architecture. An exceptionally precise and effective classification system was generated through the experimental comparison of 19 distinct convolutional neural network (CNN) architectures, followed by fine-tuning using the fine κ-nearest neighbour (KNN) algorithm,

the proposed technique shows remarkable performance in classification, as shown by its validation accuracy of 99.9% and area under the curve (AUC) of 99.86%. The system maintained strong performance when evaluated using test data, achieving an accuracy of 91.4% and an AUC of 91.76%. The efficacy of the integrated approach is substantiated by these remarkable outcomes, which provide a reliable methodology for HPV classification in colposcopy images; this has the potential to enhance cervical cancer diagnosis on a global scale.

Material and methods

Related work

Numerous investigations have advanced the domain of computer-aided diagnosis (CAD) in cervical pathology by employing distinct methodologies to improve lesion identification and classification. Zhang et al. [4] introduced a CAD technique for automatically identifying cervical precancerous lesions, with particular emphasis on distinguishing cervical intraepithelial neoplasia grade 2 (CIN2) or higher-level lesions in cervical images. Their methodology incorporated a pre-processing stage encompassing data augmentation and extraction of regions of interest. By fine-tuning the parameters of each layer of a pre-trained DenseNet convolutional neural network, they attained an accuracy of 73.08% and an AUC of 0.75 on a test set comprising 600 images. To improve the diagnosis of low-grade squamous intraepithelial lesions or worse (LSIL+), including CIN and cervical cancer, Li et al. [5] examined the application of time-lapse colposcopic images. They constructed their system from key-frame feature-encoding networks and a feature fusion network. The encoding networks captured the characteristics of colposcopic images taken before and at various points during the acetic acid test. Using a graph convolutional network with edge features (E-GCN) for feature fusion, the researchers achieved a classification accuracy of 78.33% on a large dataset comprising 7668 images. Yu et al. [6] introduced an approach to the analysis of colposcopy images that incorporates time-series information. Their gated recurrent convolutional neural network (C-GCNN), which integrates multistate cervical images for CIN grading, achieved a sensitivity of 95.68%, a specificity of 98.72%, and an accuracy of 96.87%. Saini et al. [7] developed ColpoNet, an enhanced DenseNet model for classifying cervical cancer from colposcopy images. Their framework achieved an accuracy of 81.353% when applied to a dataset consisting of 400 CIN1 and 400 CIN2/CIN3/CIN4 images. Xu et al. [8] addressed image preprocessing for the classification of cervical neoplasia by implementing a two-way augmentation technique. Their methodology categorised cervical lesions with 82.31% accuracy using CNN models and an optimised support vector machine. Cho et al. [9] performed five-way classification of neoplasia on a heterogeneous dataset of pre-processed, highly complex images. Using ResNet-15, they attained an accuracy of 51.7%.

Although these studies have significantly advanced the state of the art in colposcopy image analysis, it is noteworthy that the existing literature predominantly focuses on the classification of various cancer types. Surprisingly, there is a discernible gap in research on the detection of HPV using colposcopy images. The present study sought to fill this gap by introducing a novel methodology explicitly designed for HPV classification, thereby expanding the scope of research in medical imaging and cancer detection. Table 1 provides a comparison of existing work in which only colposcopy and neoplasia images are taken for classification.

Table 1

Comparison of existing work

| Author | Methodology | Results | Conclusions |
|---|---|---|---|
| Zhang et al. [4] | CAD approach, DenseNet CNN, ROI extraction, data augmentation | Accuracy: 73.08%, AUC: 0.75 | Successful automatic identification of cervical pre-cancerous lesions, distinguishing CIN2 or higher |
| Li et al. [5] | Deep learning framework, key-frame feature encoding, feature fusion, E-GCN | Classification accuracy: 78.33% | Efficient diagnosis of LSIL+ using time-lapsed colposcopic images. E-GCN proved to be suitable for feature fusion |
| Yu et al. [6] | Gated recurrent CNN, time series considerations, multistate cervical images merging | Accuracy: 96.87%, sensitivity: 95.68%, specificity: 98.72% | High accuracy in CIN grading using colposcopy images and time series considerations |
| Saini et al. [7] | ColpoNet architecture (enhanced DenseNet model) | Accuracy: 81.353% | Successful cervical cancer classification using the ColpoNet model |
| Xu et al. [8] | Two-way augmentation, CNN, SVM | Accuracy: 82.31% | Effective two-way augmentation for preprocessing, achieving high accuracy in cervical neoplasia classification |
| Cho et al. [9] | Image pre-processing, ResNet-15, five-way neoplasia classification | Accuracy: 51.7% | Neoplasia classification using ResNet-15, with a moderate accuracy of 51.7% |

About dataset

The dataset was obtained on request from the International Agency for Research on Cancer (IARC). It contains colposcopy images captured after the application of normal saline, acetic acid, acetic acid with a green filter, and Lugol’s iodine. Only the images captured after acetic acid application were considered in this study.

Methodology

Image pre-processing

The dataset utilised in this research, obtained from IARC, comprises 2760 images categorised as either HPV positive or HPV negative. To train the deep learning-based image classification model, 80% of the dataset was allocated for training, and the remaining 20% was reserved for testing. Acquiring a substantial volume of images is challenging, particularly within the specialised domain of colposcopy. Consequently, the dataset underwent augmentation procedures, including flipping, rotation, contrast variation, brightness variation, random rotation, and translation, as listed in Table 2. Horizontal and vertical flips together with left and right rotations expanded the dataset five-fold. To balance the classes, the numbers were adjusted to 690 samples for each of the HPV-positive and HPV-negative categories. The images were resized to 224 × 224 pixels in accordance with the preprocessing requirements of the model. The final dataset comprised 2760 samples with a balanced proportion of HPV-positive and HPV-negative samples. It provides an extensive and varied collection that is well suited to rigorous training and evaluation of the image classification model.
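The five-fold geometric expansion and the 80/20 split described above can be sketched as follows. This is a minimal illustration rather than the pipeline used in the study: the function names are hypothetical, the rotation angle of 90° is an assumption (the text states only "left and right rotations"), and the contrast, brightness, and translation operations are omitted.

```python
import numpy as np

def augment_five_fold(image):
    """Return the original image plus four geometric variants.

    Assumption: 'left' and 'right' rotations are taken to be 90 degrees.
    """
    return [
        image,
        np.fliplr(image),       # horizontal flip
        np.flipud(image),       # vertical flip
        np.rot90(image, k=1),   # rotate 90 degrees counter-clockwise (left)
        np.rot90(image, k=-1),  # rotate 90 degrees clockwise (right)
    ]

def train_test_split_indices(n_samples, test_fraction=0.2, seed=0):
    """Randomly split sample indices into training and testing sets."""
    rng = np.random.default_rng(seed)   # fixed seed is an illustrative choice
    order = rng.permutation(n_samples)
    n_test = int(round(n_samples * test_fraction))
    return order[n_test:], order[:n_test]

# Usage with a dummy 224 x 224 RGB image and the dataset size from the text
dummy = np.zeros((224, 224, 3), dtype=np.uint8)
variants = augment_five_fold(dummy)
train_idx, test_idx = train_test_split_indices(2760)
print(len(variants), len(train_idx), len(test_idx))
```

Because the images are square, the 90° rotations preserve the 224 × 224 input size expected by the model.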

Feature extraction using EfficientNetB0

Feature extraction, a critical stage in data analysis, converts raw inputs into a representation that is easier to interpret and process [10]. One option is to extract handcrafted (HC) features, i.e. image qualities that are precisely defined according to the particular attributes of the target region. Because they are straightforward to extract, HC features have been widely employed by researchers, particularly in situations involving limited datasets. Such features, formulated in conjunction with domain specialists, yield a distinct training dataset and a representation that is more lucid than that of alternative features. Particular features can additionally be assigned varying degrees of importance in relation to the goal. However, HC features may be difficult to discern in complex images. This necessitates an alternative approach to feature extraction, i.e. the application of deep learning models (DLMs). The significance of DLMs, and of CNNs in particular, has increased substantially over the past decade owing to advancements in processing capacity, which have made it possible to train more intricate networks while reducing the time required. Convolutional neural networks employed as feature extractors learn features directly from the input images. Handcrafted features and DLMs can thus both be used, depending on the demands of the dataset and the analytical purpose. Obtaining optimal features with DLMs requires extensive training and considerable variation in the data; however, DLMs offer the benefit of autonomously performing crucial preparatory tasks [11].
The accompanying figure depicts the design of EfficientNetB0. EfficientNet architectures optimise performance [12] by scaling the network in a balanced way according to the available resources. Rather than designing a network within a predetermined budget and subsequently enlarging it arbitrarily when additional resources become available, EfficientNet scales depth, width, and resolution together. This allows the design to make efficient use of its resources, and scaling up the baseline produces a succession of progressively larger networks. The baseline architecture, EfficientNet-B0, comprises an initial convolution layer for feature extraction followed by 16 MobileNet [13] inverted bottleneck (MBConv) blocks with 3 × 3 or 5 × 5 kernels. Convolution, pooling, and fully connected layers follow. With 5.3 million trainable parameters, EfficientNet-B0 is compact, which streamlines the training procedure.
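The balanced scaling of depth, width, and resolution mentioned above is usually written as the compound scaling rule from the EfficientNet paper [12]. It is reproduced here for reference only; the symbols below follow that paper's notation and do not appear elsewhere in this article.

```latex
% Compound scaling: a single coefficient \phi controls all three dimensions
\begin{aligned}
\text{depth: } & d = \alpha^{\phi}, \qquad
\text{width: } w = \beta^{\phi}, \qquad
\text{resolution: } r = \gamma^{\phi}, \\
\text{subject to } & \alpha \cdot \beta^{2} \cdot \gamma^{2} \approx 2,
\qquad \alpha \ge 1,\ \beta \ge 1,\ \gamma \ge 1 .
\end{aligned}
```

EfficientNet-B0 is the unscaled baseline network; larger variants are obtained by increasing φ, which roughly doubles the computational cost for each unit increase.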

Classification using K-nearest neighbour

A basic nearest-neighbour classifier assigns each pixel to the same class as the training sample with the nearest intensity. However, this method may lead to errors if the nearest neighbour is an outlier from another class. To enhance the robustness of this approach, the KNN classifier, which considers the K nearest patterns, was employed. The κ-nearest neighbour classifier is categorised as a non-parametric classifier because it does not assume any specific statistical structure for the data [14]. The KNN algorithm stores the training set together with its class labels, examines the K training items nearest to the item to be classified, and places the new item in the class that holds the largest number of these neighbours. This process has a time complexity of O(q) for each tuple to be classified, where q represents the training set size. The κ-nearest neighbour classification utilises a training dataset with both the input and target variables. The test data, which contain only input variables, are compared with this reference set, and the distances from the unknown sample to its K nearest neighbours determine its class assignment. This assignment can be achieved either by averaging the class numbers of the K nearest reference points or by taking a majority vote among them [15].

Feature vectors are analogous to data points when the KNN algorithm is used to classify images. X represents the feature vector of the image requiring categorisation, whereas Xi denotes the feature vector of each individual image in the training set.

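The Euclidean distance d(X, Xi) is computed between X and each Xi using the formula:

$$d(X, X_i) = \sqrt{\sum_{j=1}^{n} \left(x_j - x_{i,j}\right)^2}$$

where x_j and x_{i,j} denote the jth components of X and Xi, respectively.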

In this instance, n represents the number of features in the image. The algorithm identifies the K nearest neighbours by selecting the training images with the smallest distances. The class label of the image is then determined by a majority vote among these nearest neighbours. The mathematical representation is as follows:

$$\hat{y} = \arg\max_{c} \sum_{i=1}^{K} I(y_i = c)$$

where the indicator function is denoted as I(.), yi is the class label of the ith nearest neighbour, and c is a class label. The KNN classifier thus assigns an image to the predominant class among its K nearest neighbours, providing an effective and versatile technique for image classification that does not impose strict assumptions about the underlying data distribution. Fine KNN is a kind of nearest-neighbour classifier that uses a single neighbour (K = 1) for classification, allowing it to make fine distinctions between classes.
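The distance computation and majority vote described above can be sketched in a few lines. This is a minimal illustration, not the MATLAB implementation used in the study: the function name `knn_predict` is hypothetical, and the toy two-dimensional vectors stand in for the feature vectors that would come from the feature extractor.

```python
import numpy as np
from collections import Counter

def knn_predict(train_features, train_labels, query, k=1):
    """Classify `query` by majority vote among its k nearest training vectors.

    k=1 corresponds to the 'fine KNN' setting described in the text.
    """
    train_features = np.asarray(train_features, dtype=float)
    query = np.asarray(query, dtype=float)
    # Euclidean distance between the query and every training vector
    distances = np.sqrt(((train_features - query) ** 2).sum(axis=1))
    # Indices of the k smallest distances (stable sort keeps ties in order)
    nearest = np.argsort(distances, kind="stable")[:k]
    # Majority vote over the labels of the nearest neighbours
    votes = Counter(train_labels[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Toy example with made-up feature vectors
features = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [6.0, 5.0]]
labels = ["HPV negative", "HPV negative", "HPV positive", "HPV positive"]
print(knn_predict(features, labels, [0.2, 0.1], k=1))
print(knn_predict(features, labels, [5.4, 5.0], k=3))
```

Each prediction scans the whole training set once, which matches the O(q) cost per classified item noted earlier.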

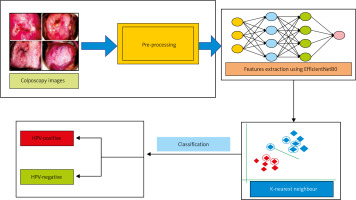

Colposcopy images were used in a systematic fashion to classify HPV status. A dataset was first compiled and then augmented to increase diversity and support robust model training. The EfficientNetB0 architecture, which is known for capturing pertinent image information efficiently, was then trained on the augmented dataset to extract features from the colposcopy images. A comparative analysis of 19 distinct CNN configurations was conducted to identify the most suitable CNN for the task, with accuracy metrics serving as the selection criteria. The chosen model was then combined with the KNN classification algorithm, which generates predictions by identifying patterns within the feature space. This comprehensive strategy, which incorporated data augmentation, effective feature extraction, model selection, and KNN fine-tuning, yielded a robust and precise HPV classification system. Figure 3 presents a simplified overview of the proposed methodology.

Results

The described approach was executed on an HP Victus laptop equipped with a 12th-generation Intel Core i7 CPU and running Windows 11. The laptop featured an integrated NVIDIA graphics processing unit, and the analysis was performed using MATLAB 2022a. To evaluate the various CNN classifiers, we examined metrics derived from their confusion matrices, including accuracy, sensitivity, specificity, precision, false-positive rate, F1 score, Matthews correlation coefficient (MCC), and κ-values. The augmented dataset was randomly split into training (80%) and testing (20%) sets to assess model performance. Dataset splits were randomised to assess the classifier's ability to generalise to unseen patients. The results reported in this study represent the average outcomes of 10 independent runs, and the ROC curves were generated from the results of these 10 runs. Table 3 displays the performance metrics of 13 CNN models alongside the proposed model.
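For reference, the metrics listed above can all be derived from the four cells of a binary confusion matrix. The sketch below is illustrative only: the function name is hypothetical, the κ-value is assumed to be Cohen's kappa, the example counts are made up, and no guard against empty classes (division by zero) is included.

```python
import math

def binary_metrics(tp, fp, tn, fn):
    """Compute standard metrics from binary confusion-matrix counts."""
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    sensitivity = tp / (tp + fn)            # recall / true-positive rate
    specificity = tn / (tn + fp)            # true-negative rate
    precision = tp / (tp + fp)
    fpr = fp / (fp + tn)                    # false-positive rate
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    # Cohen's kappa (assumed meaning of the kappa value in the text):
    # agreement beyond what is expected by chance
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {
        "accuracy": accuracy, "sensitivity": sensitivity,
        "specificity": specificity, "precision": precision,
        "fpr": fpr, "f1": f1, "mcc": mcc, "kappa": kappa,
    }

# Made-up counts for illustration only
for name, value in binary_metrics(tp=45, fp=5, tn=40, fn=10).items():
    print(f"{name}: {value:.4f}")
```

Averaging each metric over the 10 independent runs would then give figures comparable to those reported in Table 3.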

Table 3

Performance comparison of convolutional neural network models for classification of human papillomavirus in percentage

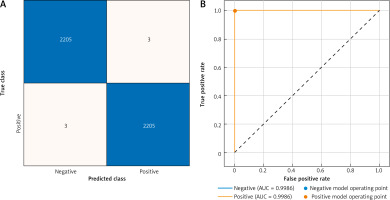

The confusion matrix and AUC for the validation dataset are presented in Figure 4. The model achieved a validation accuracy of 99.9% and a validation AUC of 99.86%, indicating strong discrimination between HPV-positive and HPV-negative images. These metrics, displayed graphically, support the efficacy and reliability of the proposed methodology for HPV classification using colposcopy images.
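As a reminder of what the AUC measures, it equals the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below computes it directly from that definition; the function name, labels, and scores are illustrative assumptions and are not taken from the study's data.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: 1 for positive, 0 for negative; ties count as half a win.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Made-up scores for four samples
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

The pairwise form is quadratic in the number of samples, which is acceptable for a sketch; library implementations typically use a sort-based equivalent.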

Discussion

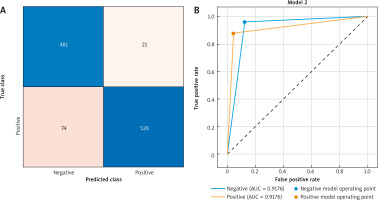

Figure 5 summarises the performance of the model on the test dataset, showing the confusion matrix and AUC. The model achieved a test accuracy of 91.4% and a test AUC of 91.76%. These results indicate that the proposed method for classifying HPV using colposcopy images remains dependable and efficient when evaluated on previously unseen data.

Conclusions

To date, there have been no reports of HPV being classified from colposcopy images for the purpose of cervical cancer diagnosis. This study addressed the feasibility of cervical cancer screening in rural areas of developing and low-income countries by introducing an analytical framework that utilises colposcopy images to classify HPV. Colposcopy, which does not require biopsy specimens for processing and has a compact and cost-effective setup, is a feasible screening alternative, particularly in resource-constrained regions. The methodology of this research follows a systematic approach, commencing with comprehensive dataset augmentation to enhance the robustness and breadth of the model. Features were then extracted using the EfficientNetB0 architecture, which excels at capturing critical image attributes. Following the evaluation of 19 CNN architectures, we identified a model that exhibited exceptional accuracy during the model selection procedure. The fine KNN method was then employed to refine the classification capabilities of the model, enabling fine distinctions between classes using a single nearest neighbour. The proposed methodology achieved a validation accuracy of 99.9% and a validation AUC of 99.86%. An accuracy of 91.4% and an AUC of 91.76% on the test data further demonstrated the strong performance of the model. These indicators suggest that the integrated approach is effective and provides a reliable structure for HPV classification. The findings of this study offer promising evidence supporting the potential clinical application of this methodology, thereby establishing a foundation for subsequent advancements in medical imaging. To enhance the practicality and impact of this approach across various therapeutic domains, subsequent work should concentrate on empirical verification and further improvement.