Introduction

Upper-tract urothelial carcinoma (UTUC) is a relatively rare malignancy that accounts for 5–10% of all urothelial cancers; however, its incidence has risen over recent decades [1, 2]. This increase has been attributed to population ageing and to advances in diagnostic tools, including high-resolution imaging, novel biomarkers and flexible ureterorenoscopes [1, 3, 4].

Globalisation and the widespread availability of the internet have changed where patients first turn for medical advice. According to the literature, the main sources of medical information used by patients are print materials, the internet (especially online forums), television and family, as well as consultations with physicians [5, 6].

Unfortunately, the rarity of UTUC may limit patients’ access to reliable information on the disease. Websites and internet forums often provide inaccurate information, which may be harmful to patients. Crucially, even healthcare professionals may be unfamiliar with the disease, which can add to patients’ anxiety. As UTUC is associated with poor survival outcomes, it is important to provide reliable and understandable information in order to ensure patients’ psychological comfort and improve compliance with treatment and follow-up protocols [7].

However, within the last few years, a new potential source of information has emerged. Large language models (LLMs) powered by artificial intelligence (AI), such as ChatGPT, are rapidly growing in popularity in the medical field. Although AI is expected to assist with multiple tasks, LLMs have limitations in medicine, as they can spread misinformation and reproduce their authors’ biases [8]. AI chatbots are now widely accessible, but patients may not be able to verify the correctness of their responses.

This study aimed to evaluate ChatGPT-generated responses to patient-important questions regarding UTUC.

Material and methods

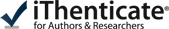

We searched websites, internet forums and social media for common questions asked by patients about UTUC. Additionally, patients admitted to our department for UTUC were interviewed and their questions were recorded. The 15 most relevant and frequently repeated questions were then selected and assigned to 4 categories: general information; symptoms and diagnosis; treatment; and prognosis (Fig. 1, Table 1).

Table 1

List of included questions concerning upper tract urothelial carcinoma

These questions were entered in English into the AI chatbot ChatGPT 3.5 (OpenAI) on 5 March 2024, and its responses were recorded without alteration. Each question was asked only once.

A questionnaire was created to evaluate the responses. It contained the 15 questions, ChatGPT’s responses and fields for scoring. Each answer was assessed against 5 criteria (adequate length, use of language comprehensible to the patient, precision in addressing the question, compliance with the European Association of Urology (EAU) guidelines and safety of the response for the patient) on a numerical scale of 1–5 (with 5 as the best score). The respondents were also asked about their gender, age and experience in urology.

Urologists with extensive experience in the treatment of urothelial cancer were invited to score the ChatGPT answers anonymously and independently.

A descriptive statistical analysis was conducted using Excel Version 16 (Microsoft Corporation, USA).

Results

Sixteen correctly completed questionnaires were included in the study. Thirteen respondents were attending urologists and 3 were urology residents. Of the participants, 81.3% were male.

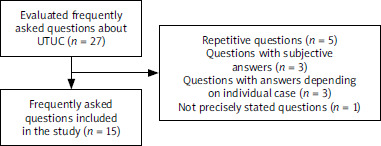

In the collective score distribution analysis, of the 1,200 individual scores (16 respondents × 15 questions × 5 criteria), 336 (28.0%) were 5, 527 (43.9%) were 4, 268 (22.3%) were 3, 53 (4.4%) were 2 and 16 (1.3%) were 1 (Fig. 2).

The average overall score was 3.93 (SD = 0.89). Mean scores for individual questions ranged from 3.34 (SD = 1.18) to 4.18 (SD = 0.95) (Table 2). The answer to the question “Is it possible to cure UTUC without surgery?” received the lowest mean score, whereas the answer to “What are the risk factors of UTUC?” received the highest.
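As a consistency check on the figures above, the short Python sketch below recomputes the percentages, the overall mean and SD, and the share of scores of 3 or lower from the reported counts alone. It is our illustration rather than the authors’ Excel workflow, and it assumes that every individual score is pooled with equal weight. Whether the population (`pstdev`) or sample (`stdev`) formula is used, the SD rounds to the same value, so the exact formula used in the original analysis is left open.

```python
from statistics import mean, pstdev, stdev

# Score counts reported in the Results (score -> number of times assigned).
counts = {5: 336, 4: 527, 3: 268, 2: 53, 1: 16}

# Expand the counts into the full list of individual scores.
scores = [score for score, n in counts.items() for _ in range(n)]

# 16 respondents x 15 questions x 5 criteria
total = len(scores)

# Percentage of all scores taken by each score value.
shares = {score: 100 * n / total for score, n in counts.items()}

# Share of scores of 3 or lower.
low_share = 100 * sum(counts[s] for s in (1, 2, 3)) / total

print(total)                                       # 1200
print({s: round(p, 1) for s, p in shares.items()})  # {5: 28.0, 4: 43.9, 3: 22.3, 2: 4.4, 1: 1.3}
print(round(mean(scores), 2))                      # 3.93
print(round(pstdev(scores), 2), round(stdev(scores), 2))  # 0.89 0.89
print(round(low_share, 1))                         # 28.1
```

The pooled counts reproduce the reported overall mean of 3.93 and SD of 0.89, which supports reading both figures as statistics over all 1,200 scores rather than over per-question averages.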

Table 2

Mean scores of the answers to the questions

Mean scores for the 4 question categories are presented in Table 3. ChatGPT responses regarding “general information” were graded the highest, with a mean score of 4.14 (SD = 0.80). The “symptoms and diagnosis” and “prognosis” categories received mean scores of 3.92 (SD = 0.93) and 3.91 (SD = 0.84), respectively. ChatGPT scored the lowest in the “treatment” category, with a mean score of 3.68 (SD = 0.96).

Table 3

Mean scores of each question category

| Question category | Mean score (standard deviation) |
|---|---|
| General information | 4.14 (0.80) |
| Symptoms and diagnosis | 3.92 (0.93) |
| Treatment | 3.68 (0.96) |
| Prognosis | 3.91 (0.84) |

Average scores for the 5 assessed criteria are presented in Table 4. The use of comprehensible language and the safety of the response for the patient both received a mean score of 4.02 (SD = 0.83 and SD = 0.93, respectively). However, a few urologists considered several answers unsafe for the patient, scoring them 1 or 2 for this criterion. For adequate length, compliance with EAU guidelines and precision in addressing the question, ChatGPT’s mean scores were 3.99 (SD = 0.86), 3.89 (SD = 0.84) and 3.72 (SD = 0.97), respectively.
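Because each criterion was scored the same number of times (16 respondents × 15 questions = 240 scores per criterion), the overall mean should equal the unweighted average of the five criterion means. The Python sketch below checks this using the rounded values from the text; the dictionary keys are our own shorthand labels, and because the inputs are rounded the check is only accurate to about two decimal places.

```python
# Mean score per criterion, as reported in the Results (rounded to 2 d.p.).
criterion_means = {
    "comprehensible language": 4.02,
    "safety": 4.02,
    "adequate length": 3.99,
    "compliance with EAU guidelines": 3.89,
    "precision": 3.72,
}

# Every criterion has 240 scores, so an unweighted average is valid here.
overall = sum(criterion_means.values()) / len(criterion_means)

# Criterion with the lowest mean score.
weakest = min(criterion_means, key=criterion_means.get)

print(round(overall, 2))  # 3.93
print(weakest)            # precision
```

The same shortcut does not apply to the category means in Table 3: 15 questions cannot be split evenly among 4 categories, so those means would have to be weighted by the number of questions in each category before being combined.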

Table 4

Mean scores of each of the 5 assessed criteria

Discussion

In this study, we carried out a preliminary, non-comparative analysis of ChatGPT’s performance in answering questions regarding UTUC. Sixteen urologists experienced in the treatment of UTUC subjectively scored the answers on a scale of 1–5 (5 being the best score). To date, no research on this particular topic has been published. However, the performance of AI in urological oncology has recently been evaluated in a number of studies on other neoplasms, including renal cell carcinoma and bladder, prostate and testicular cancer [9–12].

The chatbot’s answers were most commonly scored 4, which is close to the overall mean of 3.93. However, scores of 1, 2 or 3 accounted for 28.1% of all scores, indicating suboptimal performance of ChatGPT.

In the present study, the mean overall score of ChatGPT’s answers was 3.93. This indicates that ChatGPT cannot substitute for a medical professional as a primary source of knowledge on UTUC. Nevertheless, the score suggests that AI can provide patients with basic information of moderate quality.

Mean scores for the 15 individual responses ranged from 3.34 to 4.18. The highest score was given to the answer about risk factors of UTUC. This result is encouraging, as ChatGPT seems to address public health and prevention issues appropriately. Therefore, people without urological cancer who do not visit a urologist may still receive accurate information from the chatbot. In contrast, the answer to whether UTUC can be cured without surgery obtained the lowest score. This is of concern, as patients diagnosed with UTUC might use ChatGPT to look for alternative treatments and unverified cures for their disease, which can be extremely harmful.

The performance of ChatGPT varied between question categories. According to this study, patients would receive the best information on general aspects of UTUC. In contrast, the worst performance was recorded in the “treatment” category. Scores for the “symptoms and diagnosis” and “prognosis” categories were very close to the overall mean. Therefore, ChatGPT might serve as a tool that explains the basics of UTUC to patients. However, it should not be a primary resource for more advanced topics, especially the treatment of UTUC.

Finally, the respondents assessed various aspects of the answers based on 5 criteria: length, language, precision, compliance with EAU guidelines and safety. The most favourable scores were given for comprehensible language and the safety of the response for the patient. Nevertheless, ChatGPT cannot be classified as a safe tool, because several answers received scores of 1 or 2 for safety. To avoid situations that are dangerous for the patient, information from ChatGPT should always be verified by a healthcare professional. In addition, the urologists considered the chatbot’s answers insufficiently precise, giving precision in addressing the question the lowest mean score of all criteria (3.72).

Flaws in ChatGPT-generated responses might be caused by the rarity of UTUC and the resulting scarcity of data on the disease, as well as by frequently changing guidelines that vary significantly between the scientific associations that publish them. Moreover, the training data of ChatGPT 3.5 were last updated in January 2022, and the model does not have access to real-time information. Therefore, it cannot provide truly up-to-date responses on UTUC.

Similar studies have been published in urological oncology. Ozgor et al. evaluated ChatGPT’s answers to frequently asked questions on prostate, bladder, kidney and testicular cancers. The mean scores in that study were 4.4–4.5 out of 5. Moreover, 5 was the most commonly given score for each cancer, and the authors concluded that ChatGPT is a valuable tool for addressing general questions on urological cancers. However, these answers were evaluated by only 3 physicians [9]. In another study on ChatGPT in prostate cancer, 2 urologists gave the answers a mean score of 3.62 out of 5 [10]. Caglar et al. found that AI generated completely correct responses to 94.2% of questions regarding prostate cancer [11]. However, only 76.2% of the questions prepared according to the strong recommendations of the EAU guidelines were answered fully correctly [11]. Lastly, 70.8% of urologists thought that ChatGPT could not replace a urologist in answering questions regarding renal cell carcinoma [12].

There is no consensus regarding ChatGPT’s accuracy in answering questions on various topics. Many studies have reported more encouraging results than ours. ChatGPT’s responses were completely accurate for 92%, 94.6% and 97.9% of the questions on paediatric urology, urolithiasis and andrology, respectively [13–15]. Moreover, AI was accurate in 96.9% of inquiries about common cancer myths and misconceptions [16]. Ozgor et al. found that ChatGPT answered 91.4% of questions about endometriosis correctly, with the worst performance in the “treatment” category [17]. AI’s responses regarding vaccination myths and misconceptions were accurate in 85.4% of cases, although one question was misinterpreted [18]. On the other hand, one study reported that 25% of ChatGPT’s responses on liver cancer were inaccurate and that it did not provide reliable information on the topic [19]. Finally, Ali found that LLMs’ answers on lacrimal drainage disorders were suboptimal, with 40%, 35% and 25% graded as correct, partially correct and incorrect, respectively [20].

It is worth noting that the studies discussed used heterogeneous methodologies, with different numbers of questions and evaluators and a variety of assessment scales. Moreover, the questions were not uniform in difficulty. Finally, the results of these studies depended solely on the opinions of various experts. Therefore, these reports cannot be compared accurately.

Nonetheless, AI-based medical technologies are developing rapidly and have the potential to improve many medical fields. Prostate cancer is an excellent example of the potential implementation of AI in urology. Numerous projects have shown that AI can facilitate pathological and radiological analysis in prostate cancer. Moreover, AI has been used in genetic analysis and to optimise radiotherapy for this cancer [21]. Dedicated AI technologies for urological patients are likely to be developed in the future, and we believe that a chatbot trained specifically on verified data could provide high-quality medical information for patients.

This study has several limitations. Firstly, the assessment of the answers was subjective and the number of respondents was small. Secondly, the study was non-comparative. Finally, differences in the phrasing of the questions and in the version of ChatGPT might influence the quality of the responses.

Conclusions

ChatGPT does not provide fully adequate information on UTUC, and its answers to questions on UTUC treatment can be misleading for patients. Moreover, in some cases, patients might receive potentially unsafe answers.

However, ChatGPT can be used with caution to provide basic information regarding epidemiology and risk factors of the disease.